
SNABSuite

0.x

Spiking Neural Architecture Benchmark Suite


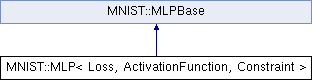

The standard densely connected multilayer perceptron. Template arguments specify the loss function, the activation function of the neurons (experimental), and an optional constraint on the weights.

#include <mnist_mlp.hpp>

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | MLP (std::vector< size_t > layer_sizes, size_t epochs=20, size_t batchsize=100, Real learn_rate=0.01) | Constructor for random initialization. |
| | MLP (Json &data, size_t epochs=20, size_t batchsize=100, Real learn_rate=0.01, bool random=false, Constraint constraint=Constraint()) | Constructs the network from a JSON file. The repository provides Python scripts that create such files from a Keras network. |
| Real | max_weight () const override | Returns the largest weight in the network. |
| Real | conv_max_weight (size_t layer_id) const override | |
| Real | min_weight () const override | Returns the smallest weight in the network. |
| Real | max_weight_abs () const override | Returns the largest absolute weight in the network. |
| const size_t & | epochs () const override | |
| const size_t & | batchsize () const override | |
| const Real & | learnrate () const override | |
| const mnist_helper::MNIST_DATA & | mnist_train_set () override | Returns a reference to the training data. |
| const mnist_helper::MNIST_DATA & | mnist_test_set () override | Returns a reference to the test data. |
| const std::vector< cypress::Matrix< Real > > & | get_weights () override | Returns all weights in the form `weights[layer](src,tar)`. |
| const std::vector< mnist_helper::CONVOLUTION_LAYER > & | get_conv_layers () override | Returns all filter weights in the form `weights[x][y][depth][filter]`. |
| const std::vector< mnist_helper::POOLING_LAYER > & | get_pooling_layers () override | |
| const std::vector< size_t > & | get_layer_sizes () override | Returns the number of neurons per layer. |
| const std::vector< mnist_helper::LAYER_TYPE > & | get_layer_types () override | |
| void | scale_down_images (size_t pooling_size=3) override | Scales down the whole data set, reducing each image by the given factor in every dimension. |
| bool | correct (const uint16_t label, const std::vector< Real > &output) const override | Checks whether the output of the network was correct. |
| virtual std::vector< std::vector< std::vector< Real > > > | forward_path (const std::vector< size_t > &indices, const size_t start) const override | Forward path of the network (inference). |
| virtual Real | forward_path_test () const override | Forward path of the test data. |
| virtual void | backward_path (const std::vector< size_t > &indices, const size_t start, const std::vector< std::vector< std::vector< Real >>> &activations, bool last_only=false) override | Implementation of backpropagation. |
| virtual void | backward_path_2 (const std::vector< uint16_t > &labels, const std::vector< std::vector< std::vector< Real >>> &activations, bool last_only=false) override | Implementation of backpropagation, adapted for use in SNNs. |
| size_t | accuracy (const std::vector< std::vector< std::vector< Real >>> &activations, const std::vector< size_t > &indices, const size_t start) override | Calculates the overall accuracy from the given neural network output. |
| void | train (unsigned seed=0) override | Starts the full training process. |

Static Public Member Functions

| Type | Member | Description |
|---|---|---|
| static std::vector< Real > | mat_X_vec (const Matrix< Real > &mat, const std::vector< Real > &vec) | Implements matrix-vector multiplication. |
| static std::vector< Real > | mat_trans_X_vec (const Matrix< Real > &mat, const std::vector< Real > &vec) | Implements transposed matrix-vector multiplication. |
| static std::vector< Real > | vec_X_vec_comp (const std::vector< Real > &vec1, const std::vector< Real > &vec2) | Component-wise vector-vector multiplication. |
| static void | update_mat (Matrix< Real > &mat, const std::vector< Real > &errors, const std::vector< Real > &pre_output, const size_t sample_num, const Real learn_rate) | Updates the weight matrix based on the error in this layer and the output of the previous layer. |

Protected Member Functions

| Type | Member |
|---|---|
| void | load_data (std::string path) |

Protected Attributes

| Type | Member |
|---|---|
| std::vector< cypress::Matrix< Real > > | m_layers |
| std::vector< size_t > | m_layer_sizes |
| std::vector< mnist_helper::CONVOLUTION_LAYER > | m_filters |
| std::vector< mnist_helper::POOLING_LAYER > | m_pools |
| std::vector< mnist_helper::LAYER_TYPE > | m_layer_types |
| size_t | m_epochs = 20 |
| size_t | m_batchsize = 100 |
| Real | learn_rate = 0.01 |
| mnist_helper::MNIST_DATA | m_mnist |
| mnist_helper::MNIST_DATA | m_mnist_test |
| Constraint | m_constraint |

Detailed Description

The standard densely connected multilayer perceptron. Template arguments specify the loss function, the activation function of the neurons (experimental), and an optional constraint on the weights.

Definition at line 241 of file mnist_mlp.hpp.

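Constructor & Destructor Documentation

MLP (std::vector< size_t > layer_sizes, size_t epochs=20, size_t batchsize=100, Real learn_rate=0.01)

[inline]

Constructor for random initialization.

| Parameter | Description |
|---|---|
| layer_sizes | number of neurons per layer, from the input layer to the output layer |
| epochs | number of training epochs |
| batchsize | mini-batch size, i.e. the number of samples processed before the weights are updated |
| learn_rate | learning rate; the gradients are multiplied by this value |
| constraint | constrains the weights during training; defaults to no constraint |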

Definition at line 274 of file mnist_mlp.hpp.
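To make the expected shapes concrete, the following is a minimal sketch of how `layer_sizes` could translate into one weight matrix per pair of consecutive layers, indexed as `weights[layer](src,tar)` (the form documented for `get_weights`). `Matrix` is a hypothetical row-major stand-in for `cypress::Matrix<Real>` with `Real` taken as `double`, `make_layers` is a hypothetical helper, and the uniform initialization range is an assumption; the constructor's actual initialization scheme is not documented here.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical stand-in for cypress::Matrix<Real>: row-major, accessed as mat(row, col).
struct Matrix {
    std::size_t n_rows, n_cols;
    std::vector<double> data;
    Matrix(std::size_t r, std::size_t c, double init = 0.0)
        : n_rows(r), n_cols(c), data(r * c, init) {}
    std::size_t rows() const { return n_rows; }
    std::size_t cols() const { return n_cols; }
    double &operator()(std::size_t r, std::size_t c) { return data[r * n_cols + c]; }
    double operator()(std::size_t r, std::size_t c) const { return data[r * n_cols + c]; }
};

// Hypothetical helper: layer l connects layer_sizes[l] source neurons (rows)
// to layer_sizes[l + 1] target neurons (columns). Uniform range is assumed.
std::vector<Matrix> make_layers(const std::vector<std::size_t> &layer_sizes, unsigned seed) {
    assert(layer_sizes.size() >= 2);
    std::mt19937 gen(seed);
    std::uniform_real_distribution<double> dist(-0.1, 0.1);
    std::vector<Matrix> layers;
    for (std::size_t l = 0; l + 1 < layer_sizes.size(); l++) {
        Matrix m(layer_sizes[l], layer_sizes[l + 1]);
        for (double &w : m.data) w = dist(gen);  // random initial weights
        layers.push_back(m);
    }
    return layers;
}
```

For `layer_sizes = {784, 100, 10}` this yields two matrices of shape 784 x 100 and 100 x 10.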

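MLP (Json &data, size_t epochs=20, size_t batchsize=100, Real learn_rate=0.01, bool random=false, Constraint constraint=Constraint())

[inline]

Constructs the network from a JSON file. The repository provides Python scripts that create such files from a Keras network.

| Parameter | Description |
|---|---|
| data | JSON object containing the network information |
| epochs | number of training epochs |
| batchsize | mini-batch size, i.e. the number of samples processed before the weights are updated |
| learn_rate | learning rate; the gradients are multiplied by this value |
| random | if true, only the network structure is taken from the JSON and the weights are initialized randomly |
| constraint | constrains the weights during training; defaults to no constraint |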

Definition at line 326 of file mnist_mlp.hpp.

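Member Function Documentation

size_t accuracy (const std::vector< std::vector< std::vector< Real >>> &activations, const std::vector< size_t > &indices, const size_t start)

[inline, override, virtual]

Calculates the overall accuracy from the given neural network output.

| Parameter | Description |
|---|---|
| activations | output of the forward path |
| indices | list of (possibly shuffled) image indices |
| start | start index; uses images indices[start] to indices[start + batchsize - 1] |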

Implements MNIST::MLPBase.

Definition at line 900 of file mnist_mlp.hpp.
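Since `accuracy` returns a `size_t`, it presumably counts correctly classified samples rather than returning a fraction. A minimal sketch under that assumption: the hypothetical helper `count_correct` takes the output-layer activations of one mini-batch, the full label list and the `indices`/`start` pair, and treats the most active output neuron as the prediction (also an assumption).

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <iterator>
#include <vector>

// outputs[i] is the output-layer activation for image indices[start + i].
std::size_t count_correct(const std::vector<std::vector<double>> &outputs,
                          const std::vector<std::uint16_t> &labels,
                          const std::vector<std::size_t> &indices, std::size_t start) {
    std::size_t n_correct = 0;
    for (std::size_t i = 0; i < outputs.size(); i++) {
        assert(start + i < indices.size());
        const std::vector<double> &out = outputs[i];
        assert(!out.empty());
        // Predicted class: index of the most active output neuron (assumed rule).
        const auto best = std::distance(out.begin(), std::max_element(out.begin(), out.end()));
        if (static_cast<std::size_t>(best) == labels[indices[start + i]]) n_correct++;
    }
    return n_correct;
}
```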

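virtual void backward_path (const std::vector< size_t > &indices, const size_t start, const std::vector< std::vector< std::vector< Real >>> &activations, bool last_only=false)

[inline, override, virtual]

Implementation of backpropagation.

| Parameter | Description |
|---|---|
| indices | list of (possibly shuffled) image indices |
| start | start index; uses images indices[start] to indices[start + batchsize - 1] |
| activations | result of the forward path |
| last_only | if true, only the last layer is trained (perceptron learning rule) |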

Implements MNIST::MLPBase.

Definition at line 798 of file mnist_mlp.hpp.
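For orientation, here is a minimal single-sample backpropagation sketch for a dense network whose weights are stored as `weights[layer](src,tar)`. It assumes a squared-error loss, ReLU activations and a plain gradient-descent step; the real class takes the loss and activation functions as template arguments and works on mini-batches, so `backprop_one` and the `Matrix` stand-in are hypothetical. The `last_only` flag mirrors the documented parameter by stopping after the output layer.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cypress::Matrix<Real>: row-major, accessed as mat(row, col).
struct Matrix {
    std::size_t n_rows, n_cols;
    std::vector<double> data;
    Matrix(std::size_t r, std::size_t c, double init = 0.0)
        : n_rows(r), n_cols(c), data(r * c, init) {}
    std::size_t rows() const { return n_rows; }
    std::size_t cols() const { return n_cols; }
    double &operator()(std::size_t r, std::size_t c) { return data[r * n_cols + c]; }
    double operator()(std::size_t r, std::size_t c) const { return data[r * n_cols + c]; }
};

// acts[0] is the input, acts[l + 1] the ReLU output of layers[l].
void backprop_one(std::vector<Matrix> &layers, const std::vector<std::vector<double>> &acts,
                  const std::vector<double> &target, double learn_rate, bool last_only) {
    assert(acts.size() == layers.size() + 1);
    assert(target.size() == acts.back().size());
    // Output error (a - y) * f'(z); for ReLU, f'(z) is 1 exactly when a > 0.
    std::vector<double> delta(target.size());
    for (std::size_t i = 0; i < delta.size(); i++)
        delta[i] = (acts.back()[i] - target[i]) * (acts.back()[i] > 0.0 ? 1.0 : 0.0);
    for (std::size_t l = layers.size(); l-- > 0;) {
        Matrix &w = layers[l];
        const std::vector<double> &pre = acts[l];
        // Propagate the error backwards with the old weights: (W * delta) * f'.
        std::vector<double> prev_delta(w.rows(), 0.0);
        for (std::size_t s = 0; s < w.rows(); s++) {
            for (std::size_t t = 0; t < w.cols(); t++) prev_delta[s] += w(s, t) * delta[t];
            prev_delta[s] *= (pre[s] > 0.0 ? 1.0 : 0.0);
        }
        // Gradient step: dL/dW(s, t) = pre[s] * delta[t].
        for (std::size_t s = 0; s < w.rows(); s++)
            for (std::size_t t = 0; t < w.cols(); t++) w(s, t) -= learn_rate * pre[s] * delta[t];
        if (last_only) break;  // train the output layer only
        delta = prev_delta;
    }
}
```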

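virtual void backward_path_2 (const std::vector< uint16_t > &labels, const std::vector< std::vector< std::vector< Real >>> &activations, bool last_only=false)

[inline, override, virtual]

Implementation of backpropagation, adapted for use in SNNs.

| Parameter | Description |
|---|---|
| labels | labels of the given batch |
| activations | activations in the form `[layer][sample][neuron]` |
| last_only | if true, only the last layer is trained (perceptron learning rule) |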

Implements MNIST::MLPBase.

Definition at line 848 of file mnist_mlp.hpp.

const size_t & batchsize () const

[inline, override, virtual]

Real conv_max_weight (size_t layer_id) const

[inline, override, virtual]

Implements MNIST::MLPBase.

Definition at line 482 of file mnist_mlp.hpp.

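bool correct (const uint16_t label, const std::vector< Real > &output) const

[inline, override, virtual]

Checks whether the output of the network was correct.

| Parameter | Description |
|---|---|
| label | index of the neuron corresponding to the correct class |
| output | activations of the output layer |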

Implements MNIST::MLPBase.

Definition at line 678 of file mnist_mlp.hpp.
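A minimal sketch of such a check, assuming the predicted class is the index of the most active output neuron; the actual rule, including how ties are handled, is not documented here. `Real` is taken as `double`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <iterator>
#include <vector>

// Assumed rule: correct if the most active output neuron is the labelled one.
// On ties std::max_element returns the first maximum.
bool correct(std::uint16_t label, const std::vector<double> &output) {
    assert(!output.empty());
    const auto best = std::distance(output.begin(), std::max_element(output.begin(), output.end()));
    return static_cast<std::size_t>(best) == label;
}
```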

const size_t & epochs () const

[inline, override, virtual]

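virtual std::vector< std::vector< std::vector< Real > > > forward_path (const std::vector< size_t > &indices, const size_t start) const

[inline, override, virtual]

Forward path of the network (inference).

| Parameter | Description |
|---|---|
| indices | list of (possibly shuffled) image indices |
| start | start index; uses images indices[start] to indices[start + batchsize - 1] |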

Implements MNIST::MLPBase.

Definition at line 724 of file mnist_mlp.hpp.
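The following sketch shows a forward pass for a single sample with weights stored as `weights[layer](src,tar)`, so each layer computes a transposed matrix-vector product followed by the activation function. ReLU is assumed for illustration (the class takes the activation as a template argument), and `forward_one` and `Matrix` are hypothetical; the real member processes a whole mini-batch selected via `indices` and `start`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cypress::Matrix<Real>: row-major, accessed as mat(row, col).
struct Matrix {
    std::size_t n_rows, n_cols;
    std::vector<double> data;
    Matrix(std::size_t r, std::size_t c, double init = 0.0)
        : n_rows(r), n_cols(c), data(r * c, init) {}
    std::size_t rows() const { return n_rows; }
    std::size_t cols() const { return n_cols; }
    double &operator()(std::size_t r, std::size_t c) { return data[r * n_cols + c]; }
    double operator()(std::size_t r, std::size_t c) const { return data[r * n_cols + c]; }
};

// Returns the activations of every layer for one sample, starting with the input.
std::vector<std::vector<double>> forward_one(const std::vector<Matrix> &layers,
                                             const std::vector<double> &input) {
    std::vector<std::vector<double>> acts{input};
    for (const Matrix &w : layers) {
        const std::vector<double> in = acts.back();  // copy: acts grows below
        assert(w.rows() == in.size());
        std::vector<double> out(w.cols(), 0.0);
        for (std::size_t s = 0; s < w.rows(); s++)
            for (std::size_t t = 0; t < w.cols(); t++) out[t] += w(s, t) * in[s];
        for (double &x : out) x = std::max(0.0, x);  // assumed ReLU activation
        acts.push_back(out);
    }
    return acts;
}
```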

virtual Real forward_path_test () const

[inline, override, virtual]

Forward path of the test data.

Implements MNIST::MLPBase.

Definition at line 760 of file mnist_mlp.hpp.

const std::vector< mnist_helper::CONVOLUTION_LAYER > & get_conv_layers ()

[inline, override, virtual]

Returns all filter weights in the form `weights[x][y][depth][filter]`.

Implements MNIST::MLPBase.

Definition at line 570 of file mnist_mlp.hpp.

const std::vector< size_t > & get_layer_sizes ()

[inline, override, virtual]

Returns the number of neurons per layer.

Implements MNIST::MLPBase.

Definition at line 585 of file mnist_mlp.hpp.

const std::vector< mnist_helper::LAYER_TYPE > & get_layer_types ()

[inline, override, virtual]

Implements MNIST::MLPBase.

Definition at line 590 of file mnist_mlp.hpp.

const std::vector< mnist_helper::POOLING_LAYER > & get_pooling_layers ()

[inline, override, virtual]

const std::vector< cypress::Matrix< Real > > & get_weights ()

[inline, override, virtual]

Returns all weights in the form `weights[layer](src,tar)`.

Implements MNIST::MLPBase.

Definition at line 559 of file mnist_mlp.hpp.

const Real & learnrate () const

[inline, override, virtual]

void load_data (std::string path)

[inline, protected]

Definition at line 254 of file mnist_mlp.hpp.

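static std::vector< Real > mat_trans_X_vec (const Matrix< Real > &mat, const std::vector< Real > &vec)

[inline, static]

Implements transposed matrix-vector multiplication, i.e. multiplies the transpose of mat by vec.

| Parameter | Description |
|---|---|
| mat | the matrix to be transposed; mat.rows() must equal vec.size() |
| vec | the vector |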

Definition at line 636 of file mnist_mlp.hpp.
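A minimal sketch of the transposed product, using a hypothetical row-major `Matrix` stand-in for `cypress::Matrix<Real>` with `Real` taken as `double`. Element `j` of the result is the sum over `i` of `mat(i, j) * vec[i]`, so the transpose never has to be built explicitly.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cypress::Matrix<Real>: row-major, accessed as mat(row, col).
struct Matrix {
    std::size_t n_rows, n_cols;
    std::vector<double> data;
    Matrix(std::size_t r, std::size_t c, double init = 0.0)
        : n_rows(r), n_cols(c), data(r * c, init) {}
    std::size_t rows() const { return n_rows; }
    std::size_t cols() const { return n_cols; }
    double &operator()(std::size_t r, std::size_t c) { return data[r * n_cols + c]; }
    double operator()(std::size_t r, std::size_t c) const { return data[r * n_cols + c]; }
};

// Computes mat^T * vec without materializing the transpose.
std::vector<double> mat_trans_X_vec(const Matrix &mat, const std::vector<double> &vec) {
    assert(mat.rows() == vec.size());
    std::vector<double> res(mat.cols(), 0.0);
    for (std::size_t i = 0; i < mat.rows(); i++)
        for (std::size_t j = 0; j < mat.cols(); j++) res[j] += mat(i, j) * vec[i];
    return res;
}
```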

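static std::vector< Real > mat_X_vec (const Matrix< Real > &mat, const std::vector< Real > &vec)

[inline, static]

Implements matrix-vector multiplication.

| Parameter | Description |
|---|---|
| mat | the matrix; mat.cols() must equal vec.size() |
| vec | the vector |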

Definition at line 614 of file mnist_mlp.hpp.
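A matching sketch of the plain matrix-vector product under the same assumptions (hypothetical `Matrix` stand-in, `Real` taken as `double`):

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cypress::Matrix<Real>: row-major, accessed as mat(row, col).
struct Matrix {
    std::size_t n_rows, n_cols;
    std::vector<double> data;
    Matrix(std::size_t r, std::size_t c, double init = 0.0)
        : n_rows(r), n_cols(c), data(r * c, init) {}
    std::size_t rows() const { return n_rows; }
    std::size_t cols() const { return n_cols; }
    double &operator()(std::size_t r, std::size_t c) { return data[r * n_cols + c]; }
    double operator()(std::size_t r, std::size_t c) const { return data[r * n_cols + c]; }
};

// Computes mat * vec; element i is the dot product of row i with vec.
std::vector<double> mat_X_vec(const Matrix &mat, const std::vector<double> &vec) {
    assert(mat.cols() == vec.size());
    std::vector<double> res(mat.rows(), 0.0);
    for (std::size_t i = 0; i < mat.rows(); i++)
        for (std::size_t j = 0; j < mat.cols(); j++) res[i] += mat(i, j) * vec[j];
    return res;
}
```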

Real max_weight () const

[inline, override, virtual]

Returns the largest weight in the network.

Implements MNIST::MLPBase.

Definition at line 471 of file mnist_mlp.hpp.

Real max_weight_abs () const

[inline, override, virtual]

Returns the largest absolute weight in the network.

Implements MNIST::MLPBase.

Definition at line 520 of file mnist_mlp.hpp.

Real min_weight () const

[inline, override, virtual]

Returns the smallest weight in the network.

Implements MNIST::MLPBase.

Definition at line 504 of file mnist_mlp.hpp.
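The three weight statistics above can be computed in a single pass over all layers. The sketch below assumes each layer's weights are available as a flat list of values; `WeightStats` and `weight_stats` are hypothetical names. The largest absolute weight always equals max(|max_weight|, |min_weight|).

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <limits>
#include <vector>

struct WeightStats {
    double max;      // largest weight
    double min;      // smallest weight
    double max_abs;  // largest absolute weight
};

// layers[l] holds all weights of layer l as a flat list (assumed representation).
WeightStats weight_stats(const std::vector<std::vector<double>> &layers) {
    assert(!layers.empty());
    WeightStats st{-std::numeric_limits<double>::infinity(),
                   std::numeric_limits<double>::infinity(), 0.0};
    for (const std::vector<double> &layer : layers)
        for (double w : layer) {
            st.max = std::max(st.max, w);
            st.min = std::min(st.min, w);
            st.max_abs = std::max(st.max_abs, std::abs(w));
        }
    return st;
}
```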

const mnist_helper::MNIST_DATA & mnist_test_set ()

[inline, override, virtual]

Returns a reference to the test data.

Implements MNIST::MLPBase.

Definition at line 549 of file mnist_mlp.hpp.

const mnist_helper::MNIST_DATA & mnist_train_set ()

[inline, override, virtual]

Returns a reference to the training data.

Implements MNIST::MLPBase.

Definition at line 540 of file mnist_mlp.hpp.

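void scale_down_images (size_t pooling_size=3)

[inline, override, virtual]

Scales down the whole data set, reducing each image by the given factor in every dimension.

| Parameter | Description |
|---|---|
| pooling_size | factor by which each image is reduced in every dimension |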

Implements MNIST::MLPBase.

Definition at line 601 of file mnist_mlp.hpp.
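A sketch of one possible downscaling, assuming square images stored row-major and average pooling over non-overlapping `factor` x `factor` blocks, where border blocks that do not fit completely are averaged over the pixels they contain. Whether the class uses average or max pooling, and how it treats the border, is not documented here, and `scale_down` is a hypothetical name. Under these assumptions, MNIST's 28 x 28 images with the default factor of 3 become 10 x 10 images.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Downscales a row-major width x width image by `factor` in each dimension
// using (assumed) average pooling; the output width is ceil(width / factor).
std::vector<double> scale_down(const std::vector<double> &img, std::size_t width,
                               std::size_t factor) {
    assert(factor > 0 && img.size() == width * width);
    const std::size_t out_w = (width + factor - 1) / factor;
    std::vector<double> out(out_w * out_w, 0.0);
    for (std::size_t oy = 0; oy < out_w; oy++)
        for (std::size_t ox = 0; ox < out_w; ox++) {
            double sum = 0.0;
            std::size_t n = 0;
            // Clip the block at the image border.
            for (std::size_t y = oy * factor; y < std::min(width, (oy + 1) * factor); y++)
                for (std::size_t x = ox * factor; x < std::min(width, (ox + 1) * factor); x++) {
                    sum += img[y * width + x];
                    n++;
                }
            out[oy * out_w + ox] = sum / static_cast<double>(n);
        }
    return out;
}
```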

void train (unsigned seed=0)

[inline, override, virtual]

Starts the full training process.

| Parameter | Description |
|---|---|
| seed | seed for the random number generator used to shuffle the images |

Implements MNIST::MLPBase.

Definition at line 926 of file mnist_mlp.hpp.
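The loop structure suggested by the `seed`, `epochs`, `batchsize`, `indices` and `start` parameters can be sketched as follows. `train_loop` is hypothetical, `step` stands in for a forward pass followed by `backward_path`, and dropping a trailing partial batch is an assumption; the actual method may also evaluate the test set or handle the last batch differently.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <numeric>
#include <random>
#include <vector>

// Each call to step() receives (indices, start) and is expected to use
// images indices[start] .. indices[start + batchsize - 1].
void train_loop(std::size_t n_images, std::size_t epochs, std::size_t batchsize, unsigned seed,
                const std::function<void(const std::vector<std::size_t> &, std::size_t)> &step) {
    assert(batchsize > 0);
    std::vector<std::size_t> indices(n_images);
    std::iota(indices.begin(), indices.end(), 0);  // 0, 1, ..., n_images - 1
    std::mt19937 gen(seed);                        // seeded for reproducible shuffling
    for (std::size_t e = 0; e < epochs; e++) {
        std::shuffle(indices.begin(), indices.end(), gen);
        // Full mini-batches only; a trailing partial batch is skipped (assumption).
        for (std::size_t start = 0; start + batchsize <= n_images; start += batchsize)
            step(indices, start);
    }
}
```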

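static void update_mat (Matrix< Real > &mat, const std::vector< Real > &errors, const std::vector< Real > &pre_output, const size_t sample_num, const Real learn_rate)

[inline, static]

Updates the weight matrix based on the error in this layer and the output of the previous layer.

| Parameter | Description |
|---|---|
| mat | weight matrix |
| errors | error vector of this layer |
| pre_output | output rates of the previous layer |
| sample_num | number of samples in this batch (equal to the mini-batch size) |
| learn_rate | learning rate by which the gradient is multiplied |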

Definition at line 696 of file mnist_mlp.hpp.
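A minimal sketch of such an update, assuming the `weights[layer](src,tar)` layout, that `errors` holds the loss gradient with respect to the weighted input of each target neuron, and that the gradient is averaged over the mini-batch by dividing by `sample_num`. The `Matrix` stand-in is hypothetical and `Real` is taken as `double`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cypress::Matrix<Real>: row-major, accessed as mat(row, col).
struct Matrix {
    std::size_t n_rows, n_cols;
    std::vector<double> data;
    Matrix(std::size_t r, std::size_t c, double init = 0.0)
        : n_rows(r), n_cols(c), data(r * c, init) {}
    std::size_t rows() const { return n_rows; }
    std::size_t cols() const { return n_cols; }
    double &operator()(std::size_t r, std::size_t c) { return data[r * n_cols + c]; }
    double operator()(std::size_t r, std::size_t c) const { return data[r * n_cols + c]; }
};

// Gradient-descent step: mat(s, t) -= learn_rate / sample_num * pre_output[s] * errors[t].
void update_mat(Matrix &mat, const std::vector<double> &errors,
                const std::vector<double> &pre_output, std::size_t sample_num,
                double learn_rate) {
    assert(mat.rows() == pre_output.size() && mat.cols() == errors.size());
    assert(sample_num > 0);
    const double scale = learn_rate / static_cast<double>(sample_num);  // batch average
    for (std::size_t s = 0; s < mat.rows(); s++)
        for (std::size_t t = 0; t < mat.cols(); t++)
            mat(s, t) -= scale * pre_output[s] * errors[t];
}
```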

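static std::vector< Real > vec_X_vec_comp (const std::vector< Real > &vec1, const std::vector< Real > &vec2)

[inline, static]

Component-wise vector-vector multiplication.

| Parameter | Description |
|---|---|
| vec1 | first vector |
| vec2 | second vector, of size vec1.size() |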

Definition at line 658 of file mnist_mlp.hpp.
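Component-wise multiplication (the Hadamard product) is what backpropagation typically uses to multiply a propagated error by the derivative of the activation function. A minimal sketch with `Real` taken as `double`:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Returns res with res[i] = vec1[i] * vec2[i].
std::vector<double> vec_X_vec_comp(const std::vector<double> &vec1,
                                   const std::vector<double> &vec2) {
    assert(vec1.size() == vec2.size());
    std::vector<double> res(vec1.size());
    for (std::size_t i = 0; i < vec1.size(); i++) res[i] = vec1[i] * vec2[i];
    return res;
}
```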

Member Data Documentation

Real learn_rate = 0.01

[protected]

Definition at line 250 of file mnist_mlp.hpp.

size_t m_batchsize = 100

[protected]

Definition at line 249 of file mnist_mlp.hpp.

Constraint m_constraint

[protected]

Definition at line 260 of file mnist_mlp.hpp.

size_t m_epochs = 20

[protected]

Definition at line 248 of file mnist_mlp.hpp.

std::vector< mnist_helper::CONVOLUTION_LAYER > m_filters

[protected]

Definition at line 245 of file mnist_mlp.hpp.

std::vector< size_t > m_layer_sizes

[protected]

Definition at line 244 of file mnist_mlp.hpp.

std::vector< mnist_helper::LAYER_TYPE > m_layer_types

[protected]

Definition at line 247 of file mnist_mlp.hpp.

std::vector< cypress::Matrix< Real > > m_layers

[protected]

Definition at line 243 of file mnist_mlp.hpp.

mnist_helper::MNIST_DATA m_mnist

[protected]

Definition at line 251 of file mnist_mlp.hpp.

mnist_helper::MNIST_DATA m_mnist_test

[protected]

Definition at line 252 of file mnist_mlp.hpp.

std::vector< mnist_helper::POOLING_LAYER > m_pools

[protected]

Definition at line 246 of file mnist_mlp.hpp.

Generated by Doxygen 1.8.11
